Perhaps the most important ingredient in the success of a software development team is the raw talent of its team members. This is supported by the so-called 10x rule, which states that the strongest developer is about 10 times as productive as the weakest. This huge variation in productivity has long been observed by development managers and is also backed by a significant body of research [1].

The 10x rule supports the view that no other decision is as important as who you hire. While you can nurture talent and help the good become great, transforming poor performers into coding rock stars is completely impractical in a development team with deadlines and deliverables. And all development teams have deadlines and deliverables.

The 10x rule also supports the view that you have to filter out many of those on the low end of the 10x spectrum to find the gems, or even the 5x supporting players. How can this be done? One of the best ways to know if someone has mastered a skill is to have them perform it and evaluate the results. If you need a musician, there’s the audition. If you need a chef, there’s the taste test. If you need a software developer, there’s the coding test.

Coding tests are nothing new and have many variations: simple paper exercises, whiteboard algorithm problems, or mini development projects that can be completed in an hour. More recently, startups like HackerRank, TestDome, and Codility offer cloud-based testing solutions that have the potential to make pre-interview testing faster, easier, and more effective.

This post reviews Codility from the CTO or software development manager’s standpoint. It’s based on my experience using Codility to evaluate the performance of a software engineering team I’d been brought in to manage, improve, and expand while CTO of a large digital marketing company. I began by testing existing team members and then tested prospective new hires.

Codility is a cloud-based developer evaluation tool that:

- Allows development managers to create online coding tests in most popular languages by combining carefully designed standardized programming tasks.

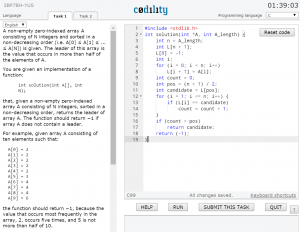

- Provides developers with an online test environment to read the task, code a solution, and run that solution much as they would in a standard code editor.

- Automatically evaluates test session results for correctness and performance against multiple test cases.

- Compares results with those of thousands of other test takers who have completed the same test.

- Reports test session results to the development manager and optionally provides feedback to the developer.
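The automated evaluation described above can be pictured as a small grading harness. This is a minimal sketch, not Codility’s implementation: the test-case format, the `grade` function, the one-second time limit, and the example task (counting distinct values) are all assumptions chosen for illustration. It runs a candidate solution against correctness and performance cases and reports the percentage passed in each category.

```python
import time
from typing import Callable, Dict, List, Tuple

# Hypothetical test-case format (not Codility's): (name, input array,
# expected answer, kind), where kind is "correctness" or "performance".
TestCase = Tuple[str, List[int], int, str]


def grade(solution: Callable[[List[int]], int],
          cases: List[TestCase],
          time_limit_s: float = 1.0) -> Dict[str, float]:
    """Return the percentage of passed cases in each category."""
    passed = {"correctness": 0, "performance": 0}
    total = {"correctness": 0, "performance": 0}
    for _name, data, expected, kind in cases:
        total[kind] += 1
        start = time.perf_counter()
        try:
            result = solution(list(data))  # copy, so a solution can't mutate the case
        except Exception:
            continue  # a crash counts as a failed case
        elapsed = time.perf_counter() - start
        # The limit is only checked after the call returns; a real grader would
        # also stop runaway processes, which this sketch does not attempt.
        if result == expected and elapsed <= time_limit_s:
            passed[kind] += 1
    return {kind: 100.0 * passed[kind] / total[kind] if total[kind] else 0.0
            for kind in total}


# Hypothetical task: count the distinct values in an array.
CASES: List[TestCase] = [
    ("example", [2, 1, 1, 2, 3, 1], 3, "correctness"),
    ("empty", [], 0, "correctness"),
    ("single", [7], 1, "correctness"),
    ("large", list(range(100_000)), 100_000, "performance"),
]

if __name__ == "__main__":
    print(grade(lambda a: len(set(a)), CASES))  # a correct solution
    print(grade(lambda a: len(a), CASES))       # a buggy one
```

The buggy solution still passes the cases it happens to get right (including the large one, where every value is distinct), which is why the per-case breakdown tells you more than the headline percentage.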

Ten years ago, Greg (Grzegorz) Jakacki was facing a problem that many development managers struggle with: only a small proportion of applicants for programming jobs could actually program to a level even close to what he required. Using principles inspired by coding competitions like the International Olympiad in Informatics [2], where he had won a bronze medal, Greg and his colleagues developed automated testing software that filtered out 90% of applicants, allowing Greg to focus his interviews on the better skilled developers who passed the test.

Greg then pitched the idea of a web-based version of his software at the 2009 Seedcamp competition in London. He won, and Codility was born. Today, Codility has offices in London, Warsaw, and San Francisco that support 200 corporate customers in over 120 countries. Since 2009, Codility has run over 2 million tests, which provides a significant dataset against which to compare new test takers.

Codility is primarily used as a pre-interview test for software developers. It can also be used to evaluate performance of an existing software development team in order to facilitate professional development or restructuring.

For Developer Recruitment. Codility is not intended to be a one-stop evaluation tool. Rather, it filters out poorly performing developers so the hiring manager can focus on strong candidates. Additional interviews and evaluations are still required. However, Codility can greatly reduce the number of interviews you must conduct to build your team; it’s not uncommon to filter out 80% to 90% of applicants in the initial testing. And because you can compare a candidate’s performance to thousands of developers worldwide, test results are more meaningful than if you could only compare them to the developers within your organization.

For Development Team Restructuring. Imagine that you’ve been brought in to fix a poorly performing software team. You find issues with product definition, development process, team tooling, etc. The team may also have some underperforming members who are reducing overall productivity and hurting morale. But, given the less-than-optimal working conditions, it’s hard to be sure whether they simply lack the support they need to thrive or lack the raw talent. Testing all team members can help you understand the team’s talent landscape and take action to improve it. It also helps you understand how test scores translate into actual developer quality and productivity, providing a meaningful metric for new candidates.

Criticisms. The primary criticism of Codility, and of controlled testing of software developers in general, is that the tests are too academic and do not measure what’s needed to succeed as a real-life developer. In real life there is teamwork, there are Internet resources, and there is time to mull things over. In real life you might do things differently from what you learned in school, as long as it works and works on time.

While all this is true, it does not diminish the value of testing. Remember that the purpose of testing is simply to create a shortlist of candidates to interview and further evaluate, not to rank all candidates. While a candidate with a score of 85% may or may not be a better choice than one with a score of 75%, candidates who do not pass at all are almost certainly a bad choice. They are unlikely to contribute more to the team than they require from it, so even if they are nice people, they will not be good team members.

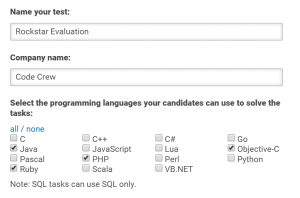

I’ll walk you through the basic steps of using Codility, and later give you some pointers on how to make it work for you.

- Name your test and select the language or languages in which you want developers to code. Choose any combination of C, C++, C#, Go, Java, JavaScript, Lua, Objective-C, Pascal, PHP, Perl, Python, Ruby, Scala, and VB.NET. If you choose more than one language, test takers can select whichever they prefer.

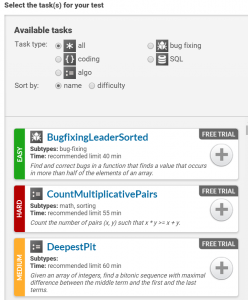

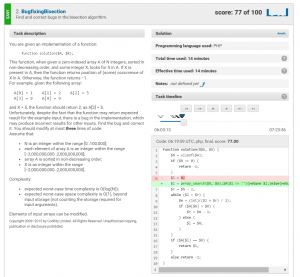

- Select a few tasks for the test. Three is the most common number, since it allows you to vary the type of tasks without exhausting the test taker. Tasks are categorized by difficulty (Easy, Medium, Hard) and type (coding, algorithm, bug fixing, and SQL). Each task typically requires the developer to write or modify a short program (usually 10–20 lines).

- Administer test sessions. These can be done onsite or emailed to the test taker for offsite completion. The test taker reads the problem and initial code, then works in the provided online editor (or optionally in their preferred editor, pasting the code in when complete). When they run the code, the system reports “OK” if it compiles and produces the expected result for the example test case. However, to get a 100% score, the code must run correctly and efficiently against all valid inputs, not just the example. Test takers can run their solution as many times as required and submit the task when they are happy with their code.

- Review task results. You’ll be notified when the test is complete and can then review the score for each task, the actual code submitted, and the total time spent working on it. You can also play back a screencast of the code as it was typed to get an idea of how the developer arrived at the solution.

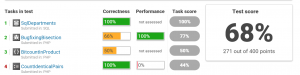

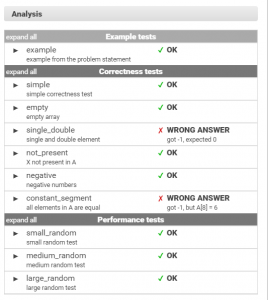
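To see why an “OK” on the example case is not enough for a 100% score, consider a hypothetical task (not taken from Codility’s library): return the smallest positive integer that does not occur in an array. Both functions below, whose names are made up for this sketch, return correct answers. The first checks membership on a plain list, which is a linear scan, so it is quadratic overall and would likely time out on large performance cases; the second builds a set first and is linear on average.

```python
from typing import List


def smallest_missing_naive(a: List[int]) -> int:
    """Correct but O(n^2): each `in` on a list scans it from the start."""
    k = 1
    while k in a:
        k += 1
    return k


def smallest_missing_fast(a: List[int]) -> int:
    """Correct and O(n) on average: set lookups are constant time on average."""
    seen = set(a)
    k = 1
    while k in seen:
        k += 1
    return k


if __name__ == "__main__":
    # On small inputs the two versions agree, so both pass the example case.
    for sample in ([3, 1, 2, 5], [-2, -1], []):
        assert smallest_missing_naive(sample) == smallest_missing_fast(sample)
    # On a large input only the fast version finishes quickly.
    print(smallest_missing_fast(list(range(1, 100_001))))  # 100001
```

Reviewing a submission against its performance results is often where this kind of difference shows up.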

You can also see the pass/fail result of each correctness and performance test case.

- Review test results. Each task is graded for correctness, and some for code performance. These task scores are averaged to produce a composite test score.

- Compare results to all test takers. What does the test result mean? You can compare individual task scores with those of all test takers, usually thousands of developers; the results are displayed when you select a task. Some tasks are very easy; for good developers they are more of a warmup round. For example, 73% of test takers got 50% or more on the SqlDepartments task, while 71% got 100%.

Other tasks are much more difficult, with almost everyone failing.
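The scoring and comparison steps above come down to simple arithmetic: average the task scores into a composite, then ask what share of other test takers scored at or below a given level. The sketch below assumes a plain unweighted mean (as the post describes) and uses a made-up list of scores rather than real Codility data; the function names are hypothetical.

```python
from statistics import mean
from typing import Sequence


def composite_score(task_scores: Sequence[float]) -> float:
    """Average the per-task scores (0-100) into one test score."""
    return float(mean(task_scores))


def share_at_least(threshold: float, population: Sequence[float]) -> float:
    """Percentage of test takers who scored at least `threshold`."""
    return 100.0 * sum(1 for s in population if s >= threshold) / len(population)


def percentile_rank(score: float, population: Sequence[float]) -> float:
    """Percentage of test takers who scored strictly below `score`."""
    return 100.0 * sum(1 for s in population if s < score) / len(population)


# Made-up scores for one task; not real Codility data.
TASK_SCORES = [0, 20, 50, 50, 100, 100, 100, 100, 100, 100]

if __name__ == "__main__":
    print(composite_score([100, 60, 20]))    # 60.0
    print(share_at_least(50, TASK_SCORES))   # 80.0
    print(share_at_least(100, TASK_SCORES))  # 60.0
    print(percentile_rank(75, TASK_SCORES))  # 40.0
```

With a distribution like this one, where most people score 100, a perfect score says little about a candidate; a task with a wider spread of results separates candidates better.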

Here are some pointers to get the most from Codility:

- Try the free trial to help you understand how Codility works and what the tests are like. The trial allows you to test up to 50 candidates in a 14-day period, but unfortunately you can only draw from a pool of six tasks to develop your tests. I found this to be insufficient for testing existing team members and new recruits. Additionally, since anyone can sign up for the trial without paying, developers could practise the six trial tasks before you test them. Therefore, I suggest using the free trial only for an initial walkthrough and, for a complete evaluation, paying for a month’s subscription.

- Test your existing team to better understand its strengths and weaknesses and to provide a metric against which to measure new candidates. You’ll want new recruits to meet or exceed test scores of your top developers.

- Select relevant test tasks. Test tasks should be similar to the kind of work your team does. This ensures the testing is relevant and not simply an academic exercise. A typical test will include two to four tasks and last an hour or two maximum.

- Blend test task difficulty. Results on the easiest and hardest tasks are somewhat binary, with almost everyone passing the easiest tasks and almost everyone failing the hardest ones. If you choose only these, most test takers will have the same score, which will not help you choose between them. Instead, use published results to select tasks at varying levels of difficulty that can discriminate between talent levels. Getting the right combination of tasks may take a few iterations of testing with your team.

- Encourage test-takers to try a demo test. Codility publishes lessons, challenges, and demo tests for developers. Taking a demo test and reviewing the developer FAQs is a great way for developers to get comfortable with the test environment and process. This makes for more accurate test results since test anxiety will result in scores that do not reflect the test taker’s full potential.

- Review the results. Compare task scores with Codility’s published results and, if you’re testing new hires, against your team’s scores. For a more detailed understanding, review the analysis section to see which test cases passed or failed and to review the code. You can even play back a screencast of the code as it was typed to understand how the developer arrived at the solution.

- Passing the test is important; the exact score is not. The passing score you choose will vary with the position, the candidate pool, and the tasks selected. Run the same test with your team and come up with a passing threshold, which you can adjust up or down depending on candidate performance. For borderline results, review the code to make a final decision. While a minimum level of competency is vital, slight variations above the threshold may or may not be meaningful. The best developer may not be the one with the highest score, so use other evaluation methods, like the interview, to determine suitability before making job offers.

- Provide feedback, or not. Codility can optionally share complete test results with test takers. If you’re testing your team, I recommend sharing the results with individual team members and perhaps discussing them in a one-on-one. However, I don’t like sharing more than the test score with the external candidates since the detailed feedback could be shared with future test takers and give them an unfair advantage.

- Test onsite if possible. I prefer to test onsite to ensure test takers don’t get help from a more experienced or competent friend. I’ll make an exception if a candidate is out of town, in which case a simple phone conversation about the coding choices they made during the test will reveal if the code submitted was theirs. Allowing online references can be OK, since time limits will prevent a test taker from using them for more than a supporting resource.

- Answer questions about the tasks. I’ll make a senior developer available to answer questions during the test. Obviously, questions like “how do you solve this problem” will not be answered, but those that help the test-taker understand what is being asked of them are OK. This is especially important when testing developers who are not native English speakers.
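The advice to calibrate a passing threshold on your own team, and to review the code of borderline candidates, can be sketched as below. The rule for the starting threshold (the lowest score among your top `top_n` developers, following the goal of having recruits meet or exceed your top developers) and the five-point borderline band are hypothetical choices for illustration, not something Codility provides; adjust both to your own results.

```python
from typing import Sequence


def starting_threshold(team_scores: Sequence[float], top_n: int = 2) -> float:
    """Hypothetical rule: the lowest score among the team's top `top_n` developers."""
    return float(sorted(team_scores, reverse=True)[top_n - 1])


def classify(score: float, threshold: float, band: float = 5.0) -> str:
    """Sort a candidate into pass / review / fail around the threshold.

    Scores within `band` points of the threshold are treated as borderline,
    where the submitted code should be reviewed before deciding.
    """
    if score >= threshold + band:
        return "pass"
    if score < threshold - band:
        return "fail"
    return "review code"


TEAM = [45, 60, 70, 85, 90]  # made-up scores from testing an existing team

if __name__ == "__main__":
    t = starting_threshold(TEAM)  # 85.0
    for candidate in (92, 87, 70):
        print(candidate, classify(candidate, t))
```

Keeping the threshold and band as parameters makes it easy to move the bar up or down as candidate results come in.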

- Free Trial: Up to 50 tests in a 14-day period, created from 6 tasks.

- Startup Subscription: 199 USD monthly for up to 30 tests created from 86 tasks.

- Enterprise Subscription: Custom pricing for unlimited tests created from 136 tasks.

- Per Test Package: Starts at 17.50 USD per test with discounts for volume.

- Discounts: One-time 50% discount for startups that purchase a Per Test Package.
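Choosing between the Startup Subscription and the Per Test Package is a break-even calculation. The sketch below uses the list prices above, which may have changed since this was written, and makes simplifying assumptions: a flat 17.50 USD per test with no volume discount, the startup discount modeled as halving the per-test price, and Enterprise pricing ignored because it is custom. The function names are hypothetical. At these prices, 11 tests on the package cost 192.50 USD, so the subscription becomes cheaper from 12 tests a month (or from 23 tests with the startup discount).

```python
SUBSCRIPTION_USD = 199.00   # Startup Subscription, per month
SUBSCRIPTION_MAX_TESTS = 30
PER_TEST_USD = 17.50        # assumed flat; volume discounts are not modeled


def per_test_cost(n: int, startup_discount: bool = False) -> float:
    """Cost of n tests on the Per Test Package (discount assumed to halve the price)."""
    price = PER_TEST_USD * (0.5 if startup_discount else 1.0)
    return n * price


def cheaper_option(n: int, startup_discount: bool = False) -> str:
    """Pick the cheaper plan for n tests in one month."""
    if n > SUBSCRIPTION_MAX_TESTS:
        # Beyond the subscription cap; Enterprise pricing is custom and not modeled.
        return "per-test package"
    if SUBSCRIPTION_USD < per_test_cost(n, startup_discount):
        return "subscription"
    return "per-test package"


def break_even(startup_discount: bool = False) -> int:
    """Smallest monthly test count at which the subscription is cheaper."""
    n = 1
    while per_test_cost(n, startup_discount) <= SUBSCRIPTION_USD:
        n += 1
    return n


if __name__ == "__main__":
    print(break_even())                      # 12
    print(break_even(startup_discount=True))  # 23
    print(cheaper_option(10), cheaper_option(20))
```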

Used as the first step in a comprehensive strategy, Codility can increase both the efficiency and the effectiveness of your organization’s software developer talent evaluation. Given the importance of talent acquisition in a software team’s success, using it to find those 10x developers is a smart choice.

- [1] Steve McConnell, "Productivity Variations Among Software Developers and Teams: The Origin of 10x," March 27, 2008.

- [2] Grzegorz Jakacki, Marcin Kubica, and Tomasz Walen, "Application of Olympiad-Style Code Assessment to Pre-Hire Screening of Programmers," Olympiads in Informatics, Vol. 5, 2011, pp. 32–43.